Speeding Up Docker Builds for a Large Rails Monolith

Discover how Kajabi reduced Docker image build times for their large Rails monolith through strategic optimizations, modern asset compilers, and stateful build services.

We’ve been on a journey for the last four years to drive down image build times for our large, nine-year-old Rails monolith. If you are familiar with the Rails ecosystem, you know that asset compilation has gone through several iterations during that time. Like many organizations, we’ve added new tools without removing old ones, so Sprockets, Webpacker, and Vite have all been active at the same time. Even if your build is less complex, asset compilation is the long pole in the tent when building a Docker image of a large Rails app. In this article, we walk through our journey to improve build times.

First Steps from Slugs to Images

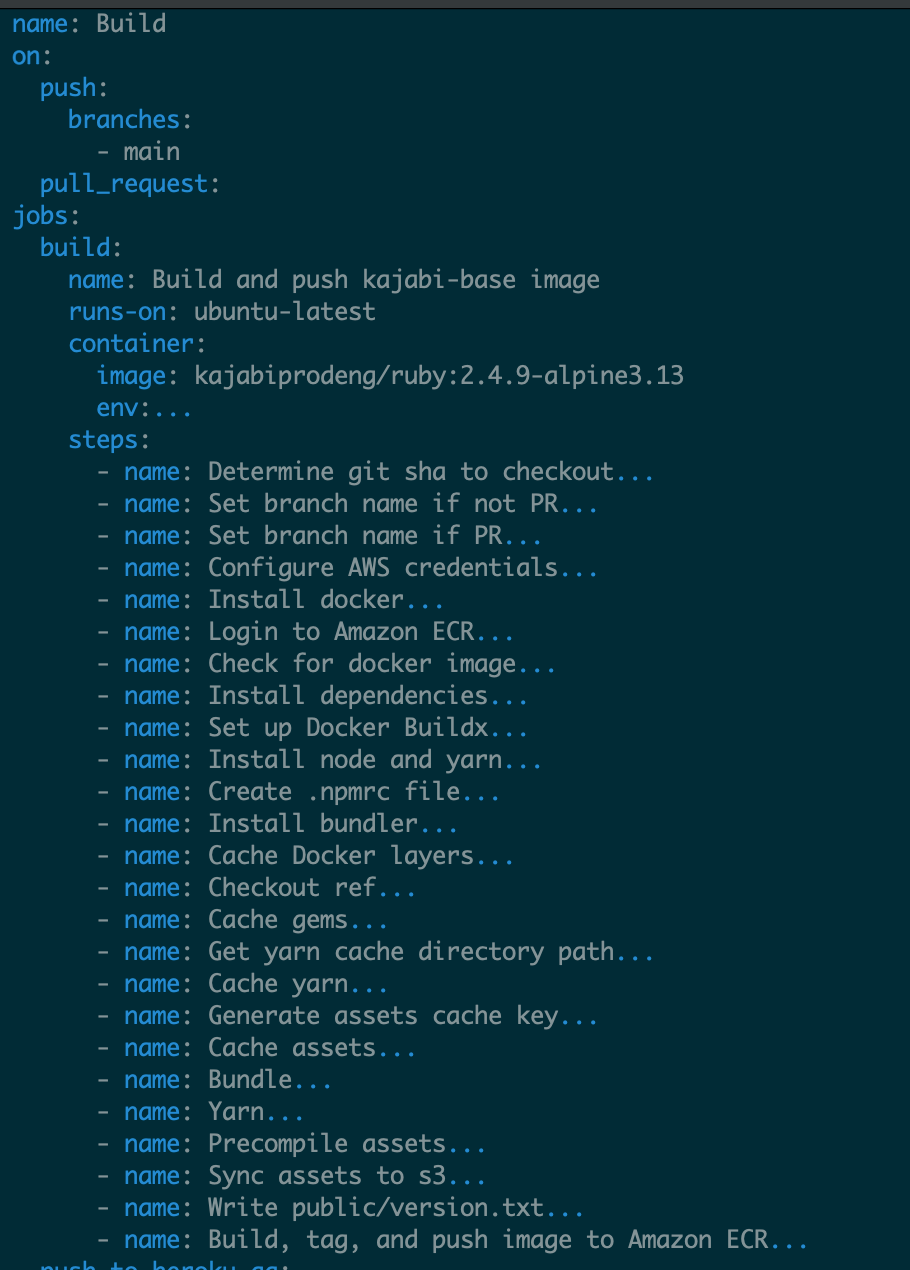

Kajabi was outgrowing Heroku when I joined in 2020, and our team was working through a migration to Amazon EKS. To simplify the deploy process, we switched from Heroku’s slug compilation to building a Docker image containing our code and assets. That image could then be deployed to both Heroku and EKS as part of our dual deploys during the initial migration. Our first image build process was a GitHub Actions workflow. It performed gem bundling, package management, and asset compilation on the GitHub Actions runner, then copied the gems, packages, code, and assets into the image. Because bundling, yarn packaging, and asset compilation all ran on the runner, we could use actions/cache to speed up the build. The process worked, but it was very specific to GitHub Actions. Asset compilation with Sprockets and Webpacker drove image build times, which depended on how much of the cache could be reused. The Dockerfile itself was simple: it copied the repository source files, including vendored gems, and the compiled assets in the public directory.
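A copy-only Dockerfile of the kind described above might look roughly like the sketch below. It is an illustration, not our original file. The `ruby:${RUBY_VERSION}-slim` base image, the version default, and the `BUNDLE_*` settings are assumptions. The key property is that nothing is compiled inside `docker build`: the runner has already produced `vendor/bundle` and the precompiled assets under `public/`.

```dockerfile
# Hypothetical sketch of a copy-only Dockerfile. The CI runner has already run
# bundle install (into vendor/bundle) and asset precompilation, so the image
# build only copies the results in. Base image and version are placeholders.
ARG RUBY_VERSION=3.2
FROM ruby:${RUBY_VERSION}-slim

WORKDIR /app
# Point Bundler at the vendored gems; these settings are assumptions and must
# match how the runner installed the bundle.
ENV RAILS_ENV=production \
    BUNDLE_DEPLOYMENT=1 \
    BUNDLE_PATH=/app/vendor/bundle \
    BUNDLE_WITHOUT=development:test

# Source code, vendored gems (vendor/bundle) and compiled assets (public/)
# all come straight from the build context prepared on the runner.
COPY . /app

CMD ["bundle", "exec", "rails", "server", "-b", "0.0.0.0"]
```

The trade-off is that gems with native extensions are compiled on the runner. The runner's Ruby version and C library must therefore be compatible with the image's, which ties the image build to that runner environment.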

On Heroku, the slug was compiled on each push to our QA or staging environment. For production deploys, we reused the slug from one of the lower environments instead of compiling a new one. After the migration, Docker images are built on pushes to pull requests and on merges to the main branch, which minimizes waiting for image creation at deploy time.

BuildX and Multi-Stage Builds

In 2022 we decided to move our image build process fully into a Dockerfile. The rationale from our Architecture Decision Record (ADR):

- Make the build process more portable to avoid vendor lock-in

- Leverage Docker to have a repeatable build process that can be replicated in any environment, including a developer’s laptop.

- Leverage the zeitgeist of tooling around Docker that seems more broadly applicable, and therefore easier to learn and hire for, than yet another bespoke YAML configuration.

Many of the steps in our old GHA build process became separate stages in the Dockerfile’s multi-stage build. The pseudo-code below lists the stages without the details of each implementation; the stage names indicate what each one does. Separating the work into stages lets Docker decide which stages it can run in parallel.

```dockerfile
FROM ruby:${RUBY_VERSION}-alpine${ALPINE_VERSION} AS base
FROM base AS builder
FROM builder AS prod_builder_bundler
FROM builder AS prod_builder_yarn
FROM prod_builder_bundler AS prod_builder_assets_base
FROM prod_builder_assets_base AS prod_builder_sprockets
FROM prod_builder_assets_base AS prod_builder_webpacker
FROM prod_builder_assets_base AS prod_builder_bootsnap
FROM prod_builder_bundler AS prod_deploy_bundler
FROM base AS prod_deploy
```

Our image build still ran on GitHub Actions runners, but it could now also be run locally with Docker. Without caching, these runs could take up to an hour, which was slower than an uncached run in our previous configuration. GitHub Actions runners are ephemeral and stateless, so the internal cache that Docker uses to speed up builds on your local machine is not available in a runner without additional setup. The following sections detail the additional optimizations we made with layer caching and Docker state caching.
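To make the parallelism point concrete, here is a minimal multi-stage sketch that reuses a few of the stage names listed above. Everything inside the stages is an illustrative assumption rather than our actual Dockerfile. That includes the Alpine packages, `BUNDLE_WITHOUT`, the `SECRET_KEY_BASE=dummy` precompile, and the `rails server` command. The sketch also assumes a `.dockerignore` that excludes `node_modules`, `tmp`, and `log`.

```dockerfile
# Minimal sketch, not Kajabi's Dockerfile: stage names mirror the list above,
# but versions, packages and commands are illustrative assumptions.
ARG RUBY_VERSION=3.2
ARG ALPINE_VERSION=3.18
FROM ruby:${RUBY_VERSION}-alpine${ALPINE_VERSION} AS base
WORKDIR /app
ENV RAILS_ENV=production \
    BUNDLE_WITHOUT=development:test

FROM base AS builder
# Compilers and JS tooling live only in build stages, not in the final image.
RUN apk add --no-cache build-base git nodejs yarn

# These two stages depend only on `builder`, so BuildKit can run them concurrently.
FROM builder AS prod_builder_bundler
COPY Gemfile Gemfile.lock ./
RUN bundle install

FROM builder AS prod_builder_yarn
COPY package.json yarn.lock ./
RUN yarn install --frozen-lockfile

# Asset compilation waits for both dependency stages to finish.
FROM prod_builder_bundler AS prod_builder_assets
COPY --from=prod_builder_yarn /app/node_modules ./node_modules
COPY . .
RUN SECRET_KEY_BASE=dummy bundle exec rails assets:precompile

# The final image gets only installed gems, source and compiled assets.
# Add any runtime packages your gems need (e.g. tzdata, DB client libs) here.
FROM base AS prod_deploy
COPY --from=prod_builder_bundler /usr/local/bundle /usr/local/bundle
COPY . .
COPY --from=prod_builder_assets /app/public ./public
CMD ["bundle", "exec", "rails", "server", "-b", "0.0.0.0"]
```

`prod_builder_bundler` and `prod_builder_yarn` each depend only on `builder`, so BuildKit schedules them at the same time. `prod_builder_assets` starts once both have finished. Copying the lockfiles before the rest of the source also means that a change to application code alone does not invalidate the `bundle install` and `yarn install` layers.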

Layer Caching

Layer caching in Docker means caching the intermediate layers produced while building an image, so that later builds can reuse previously built layers instead of rebuilding them. We use BuildKit, through the buildx plugin, to export and import this layer cache, which can significantly speed up the build. Our initial builds stored the Docker state/cache under the git commit SHA being built and fell back to the most recent cache hit.

```yaml
- name: Cache Docker layers
  if: ${{ steps.check-image-exists.outputs.value != 'true' }}
  uses: actions/cache@v2
  with:
    path: /tmp/.buildx-cache
    key: ${{ runner.os }}-buildx-${{ steps.gitsha.outputs.value }}
    restore-keys: |
      ${{ runner.os }}-buildx-

# many steps deleted

- name: Build, tag, and push image to Amazon ECR
  id: build-image
  if: ${{ steps.check-image-exists.outputs.value != 'true' }}
  env:
    ECR_REGISTRY: ${{ steps.login-ecr.outputs.registry }}
    ECR_REPOSITORY: ${{ secrets.ECR_REPOSITORY }}
    IMAGE_TAG: ${{ steps.gitsha.outputs.value }}
  run: |
    # ISSUE: bundler plugins place items in the app config directory.
    # We are copying the bundler config and plugin info into our context
    # this is then copied from tmp to the appropriate location in the container
    # within the Dockerfile.
    # https://github.com/docker-library/ruby/issues/129
    cp -R /usr/local/bundle ./tmp/
    docker buildx build --cache-from=type=local,src=/tmp/.buildx-cache \
      --cache-to=type=local,dest=/tmp/.buildx-cache \
      --progress plain --load -f docker/Dockerfile -t $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG .
    docker image push $ECR_REGISTRY/$ECR_REPOSITORY:$IMAGE_TAG
```

We then moved to a more detailed process of identifying the best cache layers for a given build:

```yaml
# Find an ancestor ref that has a cache
- name: Determine Docker caching configuration
  id: docker-cache
  run: |
    # On QA pushes and re-runs, the current SHA should already be built,
    # so check if there's a cache for $KJ_CHECKOUT_SHA. On main, we want
    # to try the parent of KJ_CHECKOUT_SHA so if the previous main build
    # is finished, we can use it as a cache. Otherwise use the
    # merge-base. It's okay if there's no merge-base cache either,
    # BuildKit will log a warning but work fine if the cache ref isn't
    # valid.
    # docker manifest inspect doesn't support the OCI manifests exported
    # by BuildKit's cache, so we have to curl the API
    parentref="$(git rev-parse ${KJ_CHECKOUT_SHA}^)"
    echo "parentref=$parentref"
    githubbearertoken="$(echo ${{ secrets.GITHUB_TOKEN }} | base64)"
    if curl --fail --silent -H "Authorization: Bearer $githubbearertoken" -H "Accept: application/vnd.oci.image.index.v1+json" https://ghcr.io/v2/kajabi/kajabi-base-cache-v2/manifests/$KJ_CHECKOUT_SHA >/dev/null; then
      echo "::set-output name=from-tag::$KJ_CHECKOUT_SHA"
    elif curl --fail --silent -H "Authorization: Bearer $githubbearertoken" -H "Accept: application/vnd.oci.image.index.v1+json" https://ghcr.io/v2/kajabi/kajabi-base-cache-v2/manifests/$parentref >/dev/null; then
      echo "::set-output name=from-tag::$parentref"
    elif curl --fail --silent -H "Authorization: Bearer $githubbearertoken" -H "Accept: application/vnd.oci.image.index.v1+json" https://ghcr.io/v2/kajabi/kajabi-base-cache-v2/manifests/${{ needs.config.outputs.merge-base }} >/dev/null; then
      echo "::set-output name=from-tag::${{ needs.config.outputs.merge-base }}"
    else
      echo "::set-output name=from-tag::latest"
    fi

- name: Build and push
  uses: docker/build-push-action@v3
  with:
    build-args: |
      ALPINE_VERSION=${{ env.ALPINE_VERSION }}
      ARCH=${{ env.ALPINE_ARCH }}
      ASSET_HOST=https://kajabi-app-assets.kajabi-cdn.com
      BUNDLE_GEMS__CONTRIBSYS__COM=${{ secrets.BUNDLE_GEMS__CONTRIBSYS__COM }}
      GITHUB_TOKEN=${{ secrets.GH_TOKEN }}
      KJ_CHECKOUT_SHA=${{ env.KJ_CHECKOUT_SHA }}
    context: .
    file: ./docker/Dockerfile
    push: true
    tags: |
      ${{ steps.meta.outputs.tags }}
      ${{ steps.cache-meta.outputs.tags }}
    labels: ${{ steps.meta.outputs.labels }}
    cache-from: type=registry,ref=ghcr.io/kajabi/kajabi-base-cache-v2:${{ steps.docker-cache.outputs.from-tag }}
    cache-to: type=registry,ref=ghcr.io/kajabi/kajabi-base-cache-v2:${{ env.KJ_CHECKOUT_SHA }},mode=max
```

Choosing the cache source this way helps, but layer caching cannot cache at the granularity of individual files. When an engineer opens a PR that updates a gem, a node package, or a source file (Ruby or JavaScript), the change invalidates every subsequent layer, and that trickle-down effect slows the image build. For example, a PR that updates a JavaScript package and modifies a single JavaScript file forces a new yarn install and a full asset precompile with webpack. The intermediate caches that yarn and webpack rely on to speed up their work, and the files they emitted previously, may or may not be as up to date as desired, depending on which layers the cache provided. We see this effect in webpack asset compilation. This led us to the cache dance.

Cache Dance

Continuous integration (CI) runners, like those used by GitHub Actions, are ephemeral, so Docker build state is not stored for reuse between builds. BuildKit cache mounts (`RUN --mount=type=cache,...`) can be added to the Dockerfile to give a build step a persistent cache directory; however, this leads to another issue.
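A cache mount gives a single `RUN` step a directory that BuildKit keeps between builds without writing it into an image layer. The sketch below is illustrative only. It assumes the `builder` and `prod_builder_assets_base` stages from the stage list earlier, a `WORKDIR` of `/app` with the source already copied into `prod_builder_assets_base`, and mount targets chosen for yarn's download cache and Sprockets' `tmp/cache/assets` directory.

```dockerfile
# syntax=docker/dockerfile:1
# Sketch of BuildKit cache mounts. Assumes `builder` and
# `prod_builder_assets_base` exist as in the stage list; paths are assumptions.
FROM builder AS prod_builder_yarn
COPY package.json yarn.lock ./
# Yarn's download cache lives in the mount: it survives between builds on the
# same BuildKit instance but is never written into an image layer.
RUN --mount=type=cache,target=/tmp/yarn-cache \
    yarn install --frozen-lockfile --cache-folder /tmp/yarn-cache

FROM prod_builder_assets_base AS prod_builder_sprockets
# Sprockets keeps its compilation cache under tmp/cache/assets; persisting it
# lets unchanged assets be reused instead of recompiled (illustrative command).
RUN --mount=type=cache,target=/app/tmp/cache/assets \
    SECRET_KEY_BASE=dummy bundle exec rails assets:precompile
```

On a developer laptop, these mounts persist across builds automatically. On an ephemeral CI runner, they start empty on every run.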

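The runner doesn’t persist the contents of these cache mounts, and BuildKit doesn’t let you control where cache mounts are stored. We initially used a workaround similar to those others had adopted. Last year I came across the buildkit-cache-dance GitHub Action and absolutely loved the name. It works around the limitation by extracting the contents of the cache mounts after a build so they can be saved with actions/cache, and injecting them back into BuildKit before the next build.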

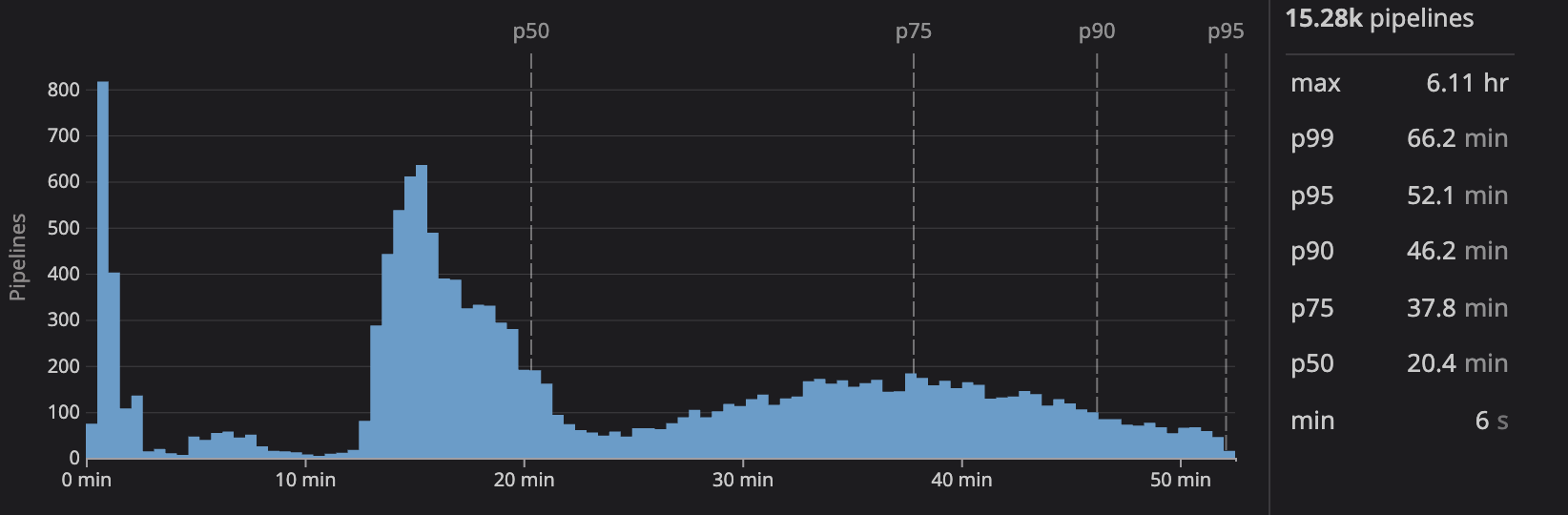

The cache dance, combined with layer caching, significantly sped up builds and cut worst-case build times. The downside is the minutes spent importing and exporting caches to and from the remote cache location. Best-case build times were under 20 minutes, but worst-case times were still 50 minutes.

Note: The extremely short builds in the chart are cases where the image already existed, which made the build essentially a no-op.

Faster Build Runners

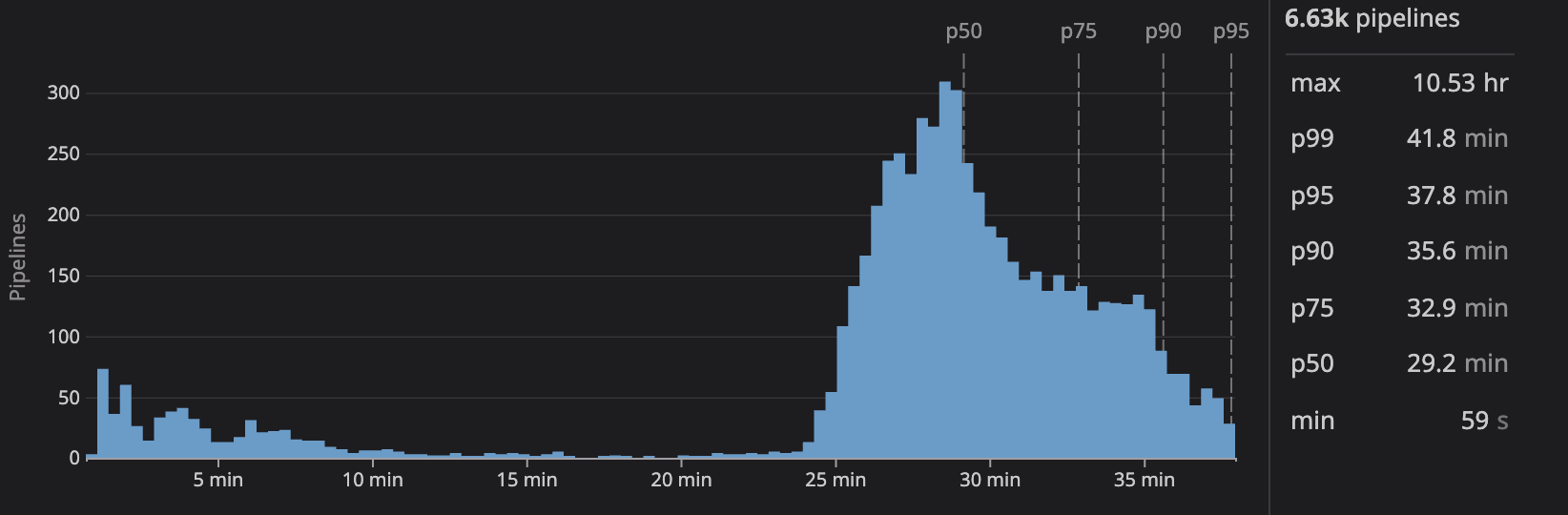

In 2023, in a separate effort, we made the call to migrate our CI suite to Harness, so it made sense to migrate the image build as well. Harness build runners are larger, faster machines than the default GitHub Actions runners, and we expected some speedup in the build. The switch was relatively straightforward because the build was already defined in a Dockerfile. During the move, we dropped the cache dance in favor of the layer cache supported by Harness’s built-in components. This simplified our build process; median build times rose, but p75 and higher durations dropped.

If we were in the same position today, GitHub's larger runners would be an attractive option.

Stateful Build Runners

While at KubeCon 2023 I ran across the booth of Depot.dev. Their stateful build runners resonated with the problems that we were trying to address.

Depot's solution is to persist the cache on fast NVMe SSDs outside the AWS EC2 build instances. Depot runs a Ceph cluster, an open-source distributed storage system, to persist the Docker layer cache from a build and reattach that cache to later builds.

Our Dockerfile was directly usable in Depot, and changing our Harness CI to call Depot was also straightforward.

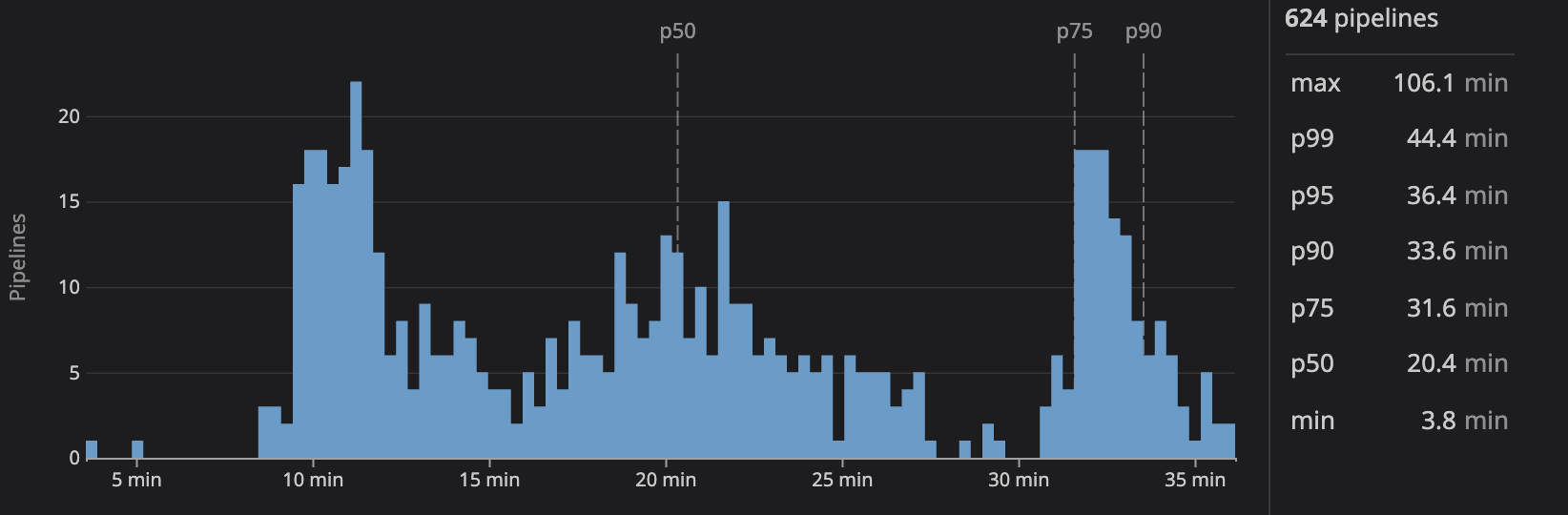

Based on budget and “commit to deploy” time considerations, we began using Depot for builds off our main branch and for direct pushes to QA environments. PR image builds were left on the stateless runners because the PR review cycle normally takes longer than the build and is completed before a PR branch is pushed to a QA environment. This move took 9 minutes off our p50 image build times.

Asset Pipeline Modernization

During our winter hackathon, a group of developers migrated a number of areas within our monolith to Vite. The lightning-fast development server was a huge hit and won the Engineering hackathon award. We decided to move forward with a full migration from Webpacker to Vite. Some additional context on our decision:

One of the main disadvantages of Webpacker is that it has been retired after five years as the default in the Rails ecosystem. Rails 7 has three new offerings for JavaScript; however, we are still on Rails 6. In addition, because Webpacker has been retired, it will not get support for Node 18, which is a high priority on our list of implementation paths.

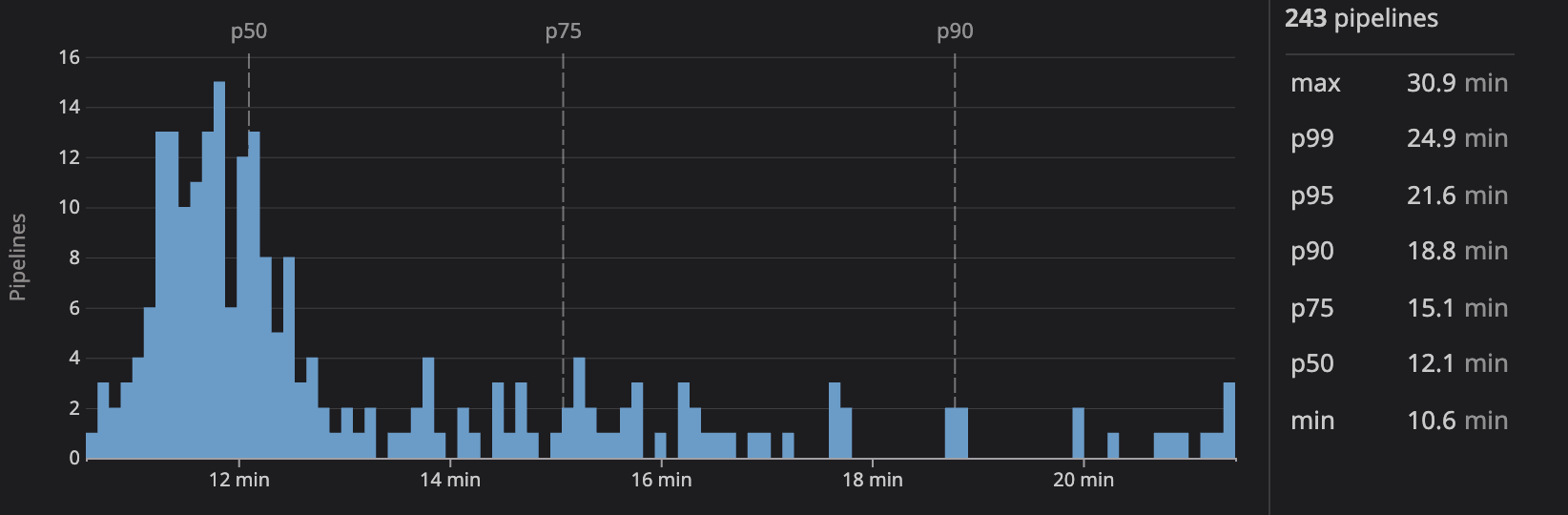

While speed was not the driver for the change, we expected a faster build based on what we saw during the hackathon. With the migration to Vite nearly complete, that expectation has been met and surpassed: we see another 8-minute reduction in p50 build times and a whopping 19.5-minute reduction at p99!

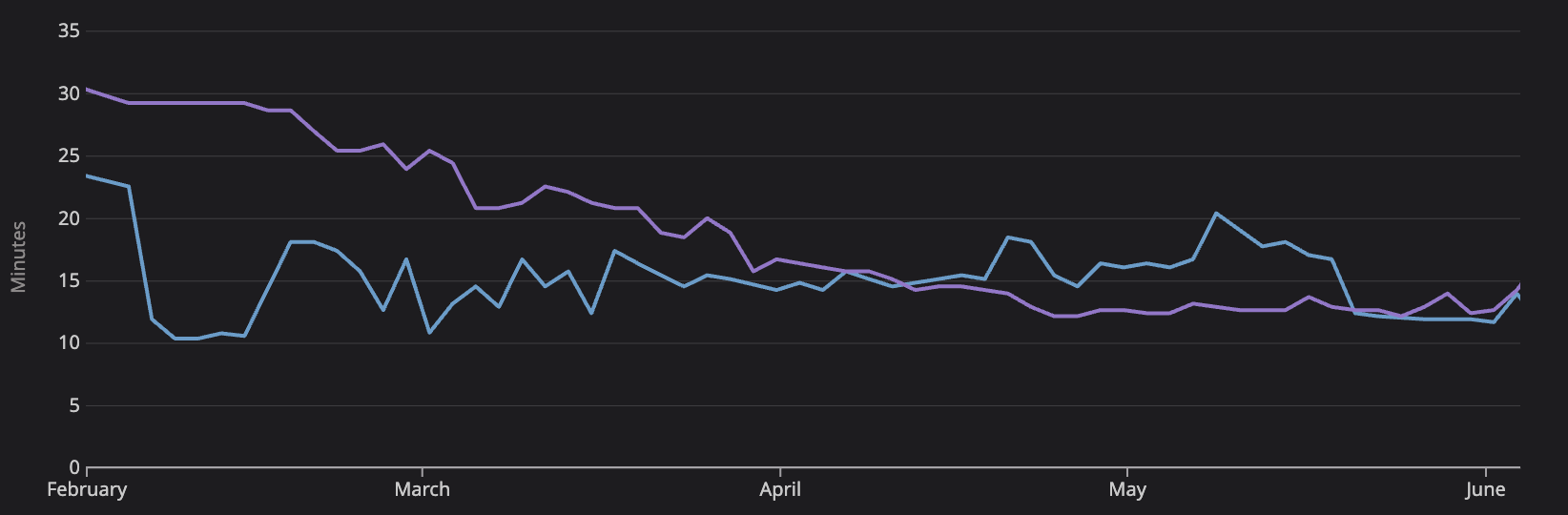

The graph above shows p50 times for the stateful and stateless build runners as we migrated from Webpacker to Vite.

The purple line shows timings for the stateless build runner, and the blue line shows the stateful build runner. As we migrated from Webpacker to Vite, the stateless build runner became as fast as the stateful runner. As noted above, the stateless runner is used for PRs and pushes to PRs, whereas the stateful runner is used for merges to main and deploys to QA environments. Some observations from this data:

- Moving from Webpacker to Vite provides significant speedups whether you are using a stateful or stateless runner.

- The stateful build runner performed worse than the stateless runner from mid-April to mid-May, until we adjusted the cache mounts we were using for the Vite build.

- The two builds are slightly different. The stateful build spends about 30 additional seconds because it triggers deploys to various environments, which the stateless builds do not. Additionally, PR pushes have different characteristics than merges to main: many of the shortest PR runs are Ruby spec changes that result in fully cached image builds, whereas merges to main tend to include source changes that are not fully cached.

What’s Next?

Making our build process more portable by leveraging Docker had some initial hurdles, but has allowed us to move our image build quickly between various CI vendors. Over the last year and a half our p99 build times have decreased by 62% and our p50 build times by 41%.

- Stateful build runners for Docker CI builds improve the best case runs. Using beefier boxes for those runners provides an added boost. Using a service like Depot or Docker’s new offering is money well spent.

- Asset compilation is the primary culprit in image build time for large Rails projects. Your choice of how to build and bundle assets for asset compilation impacts build times. If you are still using Webpacker, then optimizing your configuration or migrating to newer tooling could significantly lower image build times regardless of the build runner type.

We strive for continuous improvement and the following are on our radar:

- We are planning to support multi-architecture images. Support for this with Depot is extremely simple: we only needed to add a --platform argument to each FROM stage in our Dockerfile, and then address some multi-arch gem issues within the app. Since Depot parallelizes the builds, this should have a minimal impact on build times.

- We are considering migrating our base image from Alpine to Debian. This may affect build times.

- We want to experiment with Depot’s GitHub Actions runners.

- The image build step of our build pipeline takes approximately 50% of the time shown in the measurements above for our most recent builds. There may be some opportunity to optimize other portions of our build (e.g. syncing assets to our CDN).
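For the multi-architecture item above, the sketch below shows what the `--platform` change can look like. The stage names, the `node:20-alpine` image, the `yarn vite build` command, and the `public/vite` output path are assumptions for illustration. Stages that compile native gems run on `$TARGETPLATFORM`. A stage whose output is architecture-independent JavaScript can instead use `$BUILDPLATFORM`, so that it runs natively rather than under emulation.

```dockerfile
# syntax=docker/dockerfile:1
# Hypothetical multi-platform sketch. Build both architectures with, e.g.:
#   docker buildx build --platform linux/amd64,linux/arm64 -f docker/Dockerfile .
ARG RUBY_VERSION=3.2
ARG ALPINE_VERSION=3.18

# Stages that install native gems must run on the target platform.
# (This is also BuildKit's default; the flag just makes it explicit.)
FROM --platform=$TARGETPLATFORM ruby:${RUBY_VERSION}-alpine${ALPINE_VERSION} AS base
WORKDIR /app

# Compiled JavaScript is architecture-independent, so this stage can run
# natively on the build machine's own platform.
FROM --platform=$BUILDPLATFORM node:20-alpine AS prod_builder_vite
WORKDIR /app
COPY package.json yarn.lock ./
RUN yarn install --frozen-lockfile
COPY . .
RUN yarn vite build

FROM base AS prod_deploy
COPY . .
# public/vite is assumed to be the Vite output directory.
COPY --from=prod_builder_vite /app/public/vite ./public/vite
```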

Special thanks to Jake Smith, Kyle Chong, and Shane (Smitty) Smith for their work on the Docker build and Vite migration over the past few years.